Introduction to edge computing

What is the edge?

Corporate technology leaders and software vendors alike are facing a daunting fact: not all enterprise software will be able to run in the cloud. The cloud must be augmented with edge computing.

Basic definition

What is edge computing? It should be a straightforward answer. Unfortunately, a lot of vendors are adopting the name “edge” for their products, even when it is not really applicable. That has created some confusion. We will try to cut through that confusion. The most basic definition is:

Edge computing is the computing that is not in the cloud or the data center.

That is a start, but it leaves a lot of questions. Is my laptop edge computing? What about my smartphone? What about the internet of things? Is the fancy smart thermostat in my house edge? I hear people refer to regional data centers as “edge”. Is that right?

Different edges

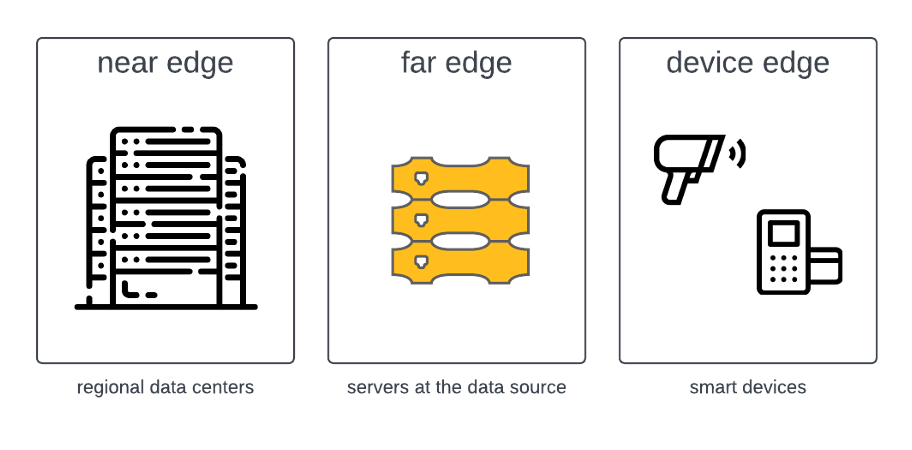

To answer these questions and to differentiate the elements of edge computing, industry has settled on three terms: near edge, far edge and device edge.

Near edge means anything using 19-inch rack-mounted equipment for edge computing. Rack servers are the big pizza-box servers that go in data centers. So, a data closet or small server room would be considered near edge. Some data centers are branding themselves as “near edge.” Perhaps the big server companies named it near edge because it is near and dear to their hearts. They love their rack-mounted form factors.

Device edge is all those smart things out there, the internet of things (IoT). Every new piece of equipment has a computer in it, and that computer has software. The computers in these things get more and more powerful, and the data they generate gets larger and larger. But they are always just the one thing.

Which brings us to the third type of edge, far edge. Or what we at Hivecell refer to as “real edge” or simply edge. Far edge is servers that run outside of a data closet or data center, as close to the source of the data as possible.

Historical context

To gain clarity, we will extend our definition of edge a little further. To do so, we need some historical context.

When computers first arrived in the 1950s, they were giant, centralized monsters. Mainframes, the descendants of these first computers, are still with us to this day. They had lots of users, central management of resources, sophisticated software deployment and update processes, and thin clients. That sounds a lot like cloud, does it not?

Starting in the 1970s, a process of decentralization began, starting with minicomputers and eventually leading to the personal or desktop computer. How we built and deployed software for PCs was very different from how we did it for mainframes.

Starting in the 1990s, the pendulum started to swing back again. We invented client-server architecture for enterprise applications, and then three-tier architectures. Applications moved from the desktop to the closet server and then to the data center. Nevertheless, the processes for building and deploying software were still nearly the same as those created for PCs.

With the advent of the web, the consolidation accelerated. With the advent of cloud computing, the consolidation was nearly complete. In particular, the development and deployment of software was now very different. Microservice architecture and continuous integration, continuous deployment (CI/CD) were simply impossible in the 1990s but are now industry standard practices.

Now we are seeing the pendulum swing back the other direction again. Eventually, edge computing will be bigger than the cloud, just as desktop computing was bigger than mainframes decades before.

More accurate definition

Which brings us to a more accurate definition. Edge computing is not a return to closet servers like we had in the 1990s. I hear companies say all the time, “We have been doing edge for years.” No, you have not. You have been doing pre-cloud computing for years. It is not the same thing at all.

A more accurate definition is:

Edge computing is the computing that is not in the cloud or the data center but is managed and monitored with tools and processes like those used in the cloud and data centers.

In this handbook we will explore this definition and its implications in detail.

Reasons for edge

There are several reasons for edge computing; the most notable are autonomy, bandwidth, cost, security, compliance and latency.

Autonomy

Business continuity and system safety demand local processing of data to avoid possible network outages. There will always be network outages. Storms blow down power lines. Workers sever cables while digging. There is also the failure of cloud services to consider. Even Amazon Web Services, as reliable as it is, stops working sometimes. Remember the S3 outage in 2017? With reliable edge computing, you may still face disruption in business, but it will not be because the network was unavailable.

Bandwidth

When we first began discussing the internet of things, most of us just assumed that all the data from these smart things would go to the cloud. We grossly underestimated how much data all the things would produce. Some data is so large that it is not feasible to move it from its source to the cloud. For instance, there are 30,000 sensors on a typical oil rig, but it would take 12 days to transport just one day of data to the cloud. As a result, most of this data is going unused, unanalyzed, just falling on the ground as it were. The key is to do initial analysis of this data at the edge and send the valuable data to the cloud.
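The mismatch between data volume and uplink capacity can be made concrete with a short calculation. This is only a sketch: the 1 TB per day volume and the 8 Mbit/s uplink are hypothetical values, chosen because they give a result close to the 12 days quoted above. They are not measurements from a real rig. The second call shows the effect of analyzing at the edge and forwarding only 1% of the data, which is also an assumed ratio.

```python
SECONDS_PER_DAY = 24 * 60 * 60


def days_to_upload(bytes_per_day: float, uplink_bits_per_second: float) -> float:
    """Days of continuous uploading needed to move one day's worth of data."""
    bits = bytes_per_day * 8
    return bits / uplink_bits_per_second / SECONDS_PER_DAY


# Hypothetical inputs: 1 TB of sensor data per day, 8 Mbit/s uplink.
raw_days = days_to_upload(1e12, 8e6)

# Hypothetical edge filtering: only 1% of the raw data is worth sending.
filtered_days = days_to_upload(1e12 * 0.01, 8e6)

print(f"raw: {raw_days:.1f} days, filtered: {filtered_days:.2f} days")
```

Whenever the raw figure is above one day, the backlog grows without bound, which is why the data ends up unused unless it is reduced at the source.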

Cost

Moving very large amounts of data across the internet and storing it in the cloud can be expensive. The cost is especially vexing when the raw data does not actually provide any value. For instance, suppose you have a camera feed that you analyze with a machine learning model to detect certain events. The events may be unsafe operation or changes in inventory. The video data itself has no value. Only the events detected by the model have business value. Why pay to ship the raw video data to the cloud and store it there when you could instead just send the events? Sure, one video feed may not be that costly, but an enterprise may have dozens of such video feeds at each of a thousand locations. The numbers add up quickly.
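To see how quickly the numbers add up, here is a rough volume estimate. All inputs are assumptions made for illustration: 24 feeds per location stands in for "dozens", 2 Mbit/s is a hypothetical bitrate for a compressed video stream, and a month is taken as 30 days. No pricing is included, since that varies by provider.

```python
SECONDS_PER_MONTH = 30 * 24 * 60 * 60  # assumes a 30-day month


def monthly_terabytes(feeds_per_location: int, locations: int,
                      bits_per_second_per_feed: float) -> float:
    """Raw video volume per month, in terabytes (10**12 bytes)."""
    total_bits_per_second = feeds_per_location * locations * bits_per_second_per_feed
    total_bytes = total_bits_per_second / 8 * SECONDS_PER_MONTH
    return total_bytes / 1e12


# Hypothetical enterprise: 24 feeds at each of 1,000 locations, 2 Mbit/s per feed.
volume_tb = monthly_terabytes(24, 1000, 2e6)
print(f"{volume_tb:,.0f} TB of raw video per month")
```

Under these assumptions the raw video comes to roughly 15,500 TB every month, while the detected events would be a tiny fraction of that.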

Security

Some data is considered too sensitive to move across the internet, even if encrypted and in a virtual private cloud (VPC). It is not just military installations, power plants and financial institutions that face such security challenges. For instance, the valuable assets of a media company are entirely digital. Is it prudent to trust the entire value of the company to a third party? Sometimes cloud is simply not an option.

Compliance

Many countries have regulations that dictate where and how data can be stored, which can prevent use of the cloud. The storage of personally identifiable information, financial transactions and especially healthcare data is increasingly regulated. Consider that there are 54 countries in Africa with 1.2 billion citizens, yet there is not a single AWS cloud facility on the entire continent. Where are those countries going to store and process the healthcare data of their citizens?

Latency

In some situations the network latency of moving data to the cloud and back again is impractical or even dangerous. Data cannot move faster than the speed of light, which is about 186,000 miles per second. That sounds very fast, but signals in optical fiber travel at roughly two thirds of that speed, which equates to about 1 millisecond of round-trip delay for every 60 miles. Distance is not the only factor. The switching between routers across the network can be far more significant. Round trip messaging on a cellular network may take 200 to 800 milliseconds. That delay may go unnoticed when reading a web page, but it is intolerable for machine decision making or augmented reality (AR). Any delay of more than 50 milliseconds makes AR intolerably jittery.
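The rule of thumb of 1 millisecond per 60 miles can be checked with a short calculation. This sketch makes two assumptions: the signal travels through optical fiber at about two thirds of the speed of light in a vacuum, and the delay is counted as a round trip. It models propagation only and ignores the router and switching delays mentioned above, so real latency will be higher.

```python
SPEED_OF_LIGHT_MILES_PER_SECOND = 186_282  # in a vacuum
FIBER_SPEED_FRACTION = 2 / 3               # assumed typical value for optical fiber


def round_trip_ms(distance_miles: float,
                  speed_fraction: float = FIBER_SPEED_FRACTION) -> float:
    """Round-trip propagation delay in milliseconds over the given distance."""
    speed = SPEED_OF_LIGHT_MILES_PER_SECOND * speed_fraction
    one_way_seconds = distance_miles / speed
    return 2 * one_way_seconds * 1000


for miles in (60, 600, 3000):
    print(f"{miles:>5} miles: {round_trip_ms(miles):.1f} ms round trip")
```

On these assumptions, a data center 3,000 miles away uses up most of a 50 millisecond budget on propagation alone, before any processing or routing delay.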

Cloud is not enough

Cloud cannot meet every computing need. Edge computing is now a real and pressing requirement for many enterprises. The reasons for edge computing include autonomy, bandwidth, cost, security, compliance and latency, but there are more. It should be noted, however, that edge computing does not eliminate the need for data centers or the cloud. Far from it. Edge computing complements existing centralized compute power. Each enterprise must find the balance between edge and cloud computing that meets its unique circumstances.

- Autonomy: business continuity cannot tolerate outage

- Latency: time delay to cloud is impractical or dangerous

- Bandwidth: data is too large to move to cloud

- Cost: network and cloud storage of data is too expensive

- Security: data too sensitive to move to cloud

- Compliance: regulations against moving data to cloud

Why we like the cloud

If the cloud taught us anything, it is that nobody wants a server; we want what a server provides for us, which is software. We want the application. The server is just a necessity, a means to an end. Today, because of the cloud, if you want an application, you open a browser on your laptop, type in a web address, push the “Create account” button and boom, you have your application.

Technology leader experience before cloud

Now, compare that experience with what was necessary in the 1990s. First you had to buy a server. If it was a multi-tier application, then you had to buy multiple servers. If you needed disaster recovery, you had to buy more servers for the DR site.

The server was a capital expense. It had to be depreciated over a three year period. Which meant you were stuck with the server you bought day one for three years. Which meant you had to calculate what your server load would be three years from now and buy for that size. Good luck with that.

Then you had to buy and install an operating system. Then you had to buy and install a database. Then, and only then, could you install your software. Of course, every software had a list of dependencies. The install process would fail with, “Missing Utility X.” When you tried to install the utility, it would fail with “Missing library Z version 2.5”. And on and on. After all that, you would finally have your application.

But it didn’t stop there. Every six to 12 months you would have to upgrade the software. Which may need an upgrade to your operating system, your database, maybe even your hardware. Oh, the joy.

It’s not that the cloud has dramatically decreased our costs. It has dramatically decreased our pain. As a technology leader, you can focus on what you want, which is the application, and not worry about servers, operating systems, databases, or updates. In fact, instead of waiting months for changes, you have them continuously, with no effort on your part. That is why technology leaders love the cloud.

Software vendor experience before cloud

As bad as it was for the customer before cloud, one could argue it was just as bad or worse for the software vendor. He had to write his software to support all kinds of hardware, different operating systems, different databases. All those exceptions made the software much more complicated. The vendor was on the hook to support the software in the customer’s environment, an environment into which the vendor had little to no visibility, let alone control.

Changes to the software could only be released every few months because of the impact it had on customers. Sometimes customers would refuse to upgrade for years, forcing the vendor to support multiple versions of the same application.

Every year, the vendor had to go hat-in-hand to beg the customer to renew his support contract. There would be haggling and last minute, end of the quarter price slashing. There was the added problem of knowing exactly how much software the customer was actually using. What a mess.

Cloud improvements to enterprise software

The cloud changed the scenario for the software vendor. The software is now deployed in an environment the vendor controls. He only needs to support one environment, his own. That means that a huge part of the cost of running a software company, the support team, shrinks dramatically. The software vendor can also modify and upgrade the environment at will. Which means he can deploy software updates at will. There is no continuous delivery without the cloud.

The vendor now has complete visibility into how his customers use his software. And here is the best thing of all (for the software vendor, that is): he can sell his software as a service and establish a continuous revenue stream.

So, the cloud is great. It removes pain from the customer and the vendor. Everybody is happy. Except. Except now we know not everything can run in the cloud. We now face the challenge of edge computing. And nobody wants to go back to the way it was before. That is the challenge that edge computing must solve.

Characteristics of the edge

Edge is not a data center. Here are six key characteristics of edge environments:

- No data closet

- No technical staff on site

- Hundreds or thousands of locations

- Limited or intermittent network bandwidth

- Behind someone else’s firewall

- Demand for compute power always increasing

For some of you, this will be validation. You are not alone. Many others face the same challenges. For others, such as IT professionals who have spent their career in the data center, this will be eye opening.

No data closet

As mentioned earlier, industry is using the term “edge” rather loosely. Some call regional data centers “near edge”. Hivecell is focused on “far edge” or real edge computing. So the first characteristic of an edge computing environment is: there is no data closet. Even if there is a data closet, it was full, underpowered and overheating long ago. There is no room for more servers.

The photo above is a typical edge deployment environment. It is the engine room of a cargo ship. You will see pretty much the same thing on a factory floor, in a job trailer, in a quick service restaurant, a retail store, a warehouse, a clinic, etc. In other words, the real edge.

There is no uninterruptible power supply (UPS), no top-of-rack router. Sometimes there is no air conditioning. This is not the sort of place you put a $20,000 server.

No technical staff

Second characteristic of edge: there is no technical staff. For the IT department working in the data center, this is hard to grasp. You see, they operate in a centralized controlled environment surrounded by their peers. They know how to shell into a server, configure a network routing table. Doesn’t everybody? No, they don’t. The most basic knowledge in a data center, the stuff the summer intern should know, is rocket science to anyone else. So how things get done, get installed and deployed in the data center is just not going to work at the edge.

Hundreds or thousands of locations

Third characteristic of the edge: there are hundreds or thousands of locations. Some customers have tens of thousands of locations. A DEVOPS guy, someone who manages the deployment of enterprise applications, is used to a handful of deployment locations. There is a DEV environment that the programmers use. There are UAT and STAGE environments for testing. There may be regional production environments, but in total? You can count them on your hands.

A simple manual process is feasible in the data center. It takes five minutes, no big deal. With edge, however, that five minutes for 1,000 locations becomes 5,000 minutes. That’s 83 hours. That’s more than 10 working days. That’s a big deal.
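The arithmetic is simple enough to sketch, which also makes it easy to try other fleet sizes. The eight-hour working day is an assumption, consistent with the "more than 10 working days" figure above.

```python
def manual_effort(minutes_per_site: float, sites: int, hours_per_day: float = 8.0):
    """Return (total_minutes, total_hours, working_days) for a repeated manual task."""
    total_minutes = minutes_per_site * sites
    total_hours = total_minutes / 60
    working_days = total_hours / hours_per_day
    return total_minutes, total_hours, working_days


# The five-minute task from the text, repeated at 1,000 locations.
minutes, hours, days = manual_effort(minutes_per_site=5, sites=1000)
print(f"{minutes:.0f} minutes = {hours:.0f} hours = {days:.1f} working days")
```

At 10,000 locations the same five-minute task takes more than 100 working days, so any step that needs a person does not scale.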

It is the difference between pets and cattle. Traditional enterprise software has pet servers. You name your pets. You know when they ate, what they ate. Edge computing is a herd of cows. They don’t have names, they have tags. You feed them en masse.

We will discuss more about edge scale later.

Limited or intermittent network

The fourth characteristic of edge computing: limited or intermittent network connectivity and bandwidth.

In one deployment of Hivecells, the customer had a remote cluster connected to the Internet through a satellite modem. Someone parked a piece of heavy equipment that blocked the line of sight to the satellite for six days. Retail stores do not have such an extreme situation, but local outages are a reality. Large storms and even construction accidents can knock out communications for hours or even days.

Management systems for data centers do not anticipate limited or intermittent network connectivity. Some systems expect 20 Gbps connections between servers. That is a ridiculous expectation at the edge.

Behind someone else’s firewall

Data center management systems such as VMware vCenter make a simple assumption: the management software is behind the same firewall as the servers it is managing. This assumption is perfectly logical for a data center. It can be made to work for virtual private clouds (VPC). However, it is completely illogical for the edge.

These systems require dozens of ports be open to connect and administer the server. Creating such a large hole in a firewall is unacceptable. Creating, maintaining and monitoring the required holes in hundreds or thousands of locations would be a huge undertaking. Read: expensive.

In reality, however, it is an impossible task, because quite often in edge deployments, you have no control over the firewall. Someone else owns it. For instance, for franchised retail, the franchiser wants to deploy edge computing in each store but the various franchisees own the network and firewall of those stores.
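One common way around a firewall you do not control, offered here as an illustration and not as something this handbook prescribes, is to reverse the direction of the connection: the edge node opens an outbound connection and asks the management service for work, so no inbound port has to be opened. The sketch below simulates that pattern in memory. `ManagementServer`, `EdgeAgent` and the node name `store-0042` are hypothetical names invented for this example, and a real agent would poll over an outbound network connection, not a direct method call.

```python
from collections import defaultdict, deque


class ManagementServer:
    """Hypothetical central service that holds commands until a node asks for them."""

    def __init__(self):
        self._pending = defaultdict(deque)

    def queue_command(self, node_id: str, command: str) -> None:
        # The operator queues work centrally; nothing is pushed to the node.
        self._pending[node_id].append(command)

    def fetch_commands(self, node_id: str) -> list:
        # Called by the edge node over a connection the node itself opened.
        commands = list(self._pending[node_id])
        self._pending[node_id].clear()
        return commands


class EdgeAgent:
    """Hypothetical agent running behind a firewall the operator does not control."""

    def __init__(self, node_id: str, server: ManagementServer):
        self.node_id = node_id
        self.server = server
        self.applied = []

    def poll_once(self) -> int:
        # The agent initiates every exchange, so no inbound port is required.
        commands = self.server.fetch_commands(self.node_id)
        self.applied.extend(commands)
        return len(commands)


server = ManagementServer()
agent = EdgeAgent("store-0042", server)
server.queue_command("store-0042", "upgrade app to 1.3")
agent.poll_once()
print(agent.applied)
```

The trade-off is that commands are only picked up when the node next polls, which suits the intermittent connectivity described earlier but is not instantaneous.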

Demand for compute power is always increasing

The last notable characteristic is that the demand for edge compute power at the current moment is relatively small. Once deployed, however, the demand will increase continuously. There will be another application, and another and another. New unanticipated use cases will follow one after another. The data will grow and grow. There will be more smart devices, more machine learning models, more everything.

When planning edge computing, customers need to consider how they will incrementally increase their edge infrastructure. The cost of the initial deployment is just the beginning. Edge infrastructure will need to start small and scale quickly.

Expectations of edge computing

Based on these edge environment requirements, customers have clear expectations for edge computing that are different from the cloud or a data center.

- No special equipment for power, air conditioning or networking

- No technicians necessary onsite to install and configure

- Start with smallest footprint possible to demonstrate ROI

- Easily add more compute power as workload expands

- Run distributed software for containers, messaging and machine learning with high availability

- Monitor, manage and upgrade centrally, even when behind a firewall

- Can apply continuous integration, continuous deployment (CI/CD) and developer operations (DEVOPS) practices

What customers want is all the good stuff of the cloud, just running on premise, that is, at the edge.

We must not forget, however, that the edge is not the cloud. There are real physical limitations that prevent the edge from being exactly like the cloud. The biggest challenge, no pun intended, is the concept I call “edge scale.” Nevertheless, we can build solutions that meet the customers’ expectations for edge computing. It will not be achieved by simply shoving data center solutions on premise. It will require innovation.