Edge in manufacturing

Use cases

There are two primary types of use cases in edge computing: greenfield and brownfield. Greenfield use cases are new. We are building new types of applications with new technologies to solve problems that were previously unsolved. Brownfield use cases, on the other hand, are existing ones. We are improving existing applications by porting them to new technologies.

Greenfield

Greenfield use cases for edge computing include predictive maintenance, augmented reality, autonomous mobile robots, safety and digital twins. All of these use cases require edge computing to address bandwidth, latency or security constraints.

- Machine learning enables new breakthroughs in predictive maintenance. ML models can monitor vibration, sound and other inputs to determine if a machine needs attention before it fails.

- Augmented reality can vastly improve worker efficiency in several tasks, including equipment repair and inventory location.

- Autonomous mobile robots have already demonstrated significant improvements in inventory movement efficiency. Expect to see them everywhere.

- Computer vision models can provide increased safety. These models can detect instantly if employees are wearing the correct protective equipment. They can warn when people or equipment move into dangerous areas.

- Digital twins provide real-time awareness of the status of all equipment in the factory. More importantly, digital twins enable analysts to conduct what-if scenario planning and testing.
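To make the predictive maintenance idea concrete, here is a minimal sketch of flagging an unusual vibration reading. It is a simple rolling statistical check standing in for a trained ML model, not a model itself. The class name `VibrationMonitor`, the window size and the threshold are all assumptions chosen for illustration.

```python
from collections import deque
from statistics import mean, stdev


class VibrationMonitor:
    """Flags readings that deviate strongly from a rolling baseline.

    Hypothetical example: window and threshold values are illustrative.
    """

    def __init__(self, window: int = 20, threshold: float = 3.0):
        self.readings = deque(maxlen=window)  # recent normal readings
        self.threshold = threshold            # allowed deviation in standard deviations

    def check(self, value: float) -> bool:
        """Return True when `value` is far outside the recent baseline."""
        alert = False
        if len(self.readings) >= 5:  # wait for a minimal baseline first
            baseline = mean(self.readings)
            spread = stdev(self.readings)
            alert = spread > 0 and abs(value - baseline) / spread > self.threshold
        if not alert:
            self.readings.append(value)  # keep anomalies out of the baseline
        return alert


if __name__ == "__main__":
    monitor = VibrationMonitor()
    for reading in [1.0, 1.1, 0.9, 1.0, 1.1, 0.9, 1.0, 5.0]:
        if monitor.check(reading):
            print(f"reading {reading} needs attention")
```

A production system would more likely learn the baseline from historical data across several signals, but the shape is the same: compare live readings against expected behavior and raise a flag before the machine fails.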

Brownfield

Brownfield use cases for edge computing include human machine interfaces (HMI), automated inventory and overall equipment effectiveness (OEE).

- Anyone familiar with manufacturing is familiar with antiquated HMI displays: clunky, ugly software that is neither intuitive nor friendly. With the web and smartphones, user interfaces have made great strides in recent years, and our expectations have increased along with them. Edge computing enables the deployment of new touch screen tablets with the latest UI designs.

- RFID has been around for years for automated inventory. There are now other ways to track inventory with IOT, such as shelf sensors and scales. With ML, we can deploy computer vision applications that monitor Kanban inventory levels.

- OEE is no longer a printed report analyzing the past week or month. Modern OEE combines and analyzes operator inputs, machine data and inventory movement to provide accurate, near-real-time visibility of your factory floor.
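As a worked example of the OEE item, the sketch below uses the conventional definition of OEE as the product of availability, performance and quality. The `ShiftData` field names and the sample numbers are assumptions for illustration, not figures from a real plant.

```python
from dataclasses import dataclass


@dataclass
class ShiftData:
    """Inputs for one shift; field names and values are illustrative."""
    planned_minutes: float      # scheduled production time
    downtime_minutes: float     # stops, from operator input or machine data
    ideal_cycle_seconds: float  # ideal time to produce one unit
    total_units: int            # units produced, good and bad
    good_units: int             # units that passed quality checks


def oee(shift: ShiftData) -> dict:
    """Return availability, performance, quality and their product (OEE)."""
    run_minutes = shift.planned_minutes - shift.downtime_minutes
    if shift.planned_minutes <= 0 or run_minutes <= 0 or shift.total_units <= 0:
        raise ValueError("shift has no run time or no output")
    availability = run_minutes / shift.planned_minutes
    performance = (shift.ideal_cycle_seconds * shift.total_units) / (run_minutes * 60)
    quality = shift.good_units / shift.total_units
    return {
        "availability": availability,
        "performance": performance,
        "quality": quality,
        "oee": availability * performance * quality,
    }


if __name__ == "__main__":
    shift = ShiftData(planned_minutes=480, downtime_minutes=60,
                      ideal_cycle_seconds=30, total_units=756, good_units=718)
    for name, value in oee(shift).items():
        print(f"{name}: {value:.1%}")
```

Because every input here can arrive continuously from machines and operators, the same calculation can be rerun every few minutes rather than once a week.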

OT versus IT

There is a gulf between operational technology (OT) and information technology (IT). Both groups deal with similar things: software, computers and automation. One would expect them to be the same, but they are not.

In IT, new technologies are encouraged. Everyone wants the new monitors and laptops and the latest version of the office software or database. In fact, continuous updates are now the norm. Everyone in the company uses IT, and it is much the same from company to company, even industry to industry. Using a human resources or accounting application is pretty much the same whether you are in manufacturing or retail.

OT differs from IT in three particular ways:

- stability

- security

- knowledge

We will discuss each of these three ways.

First, in OT, stability trumps innovation. The factory must operate. Downtime has serious consequences. Innovation in OT is perceived as high risk, while in IT it is not innovating that is perceived as the risk. As such, innovation in OT proceeds very deliberately or is avoided entirely. A shop manager may ask, “Can you guarantee that the change will have no adverse effects?” No one can be 100% certain, so the change is blocked.

Second, security has much higher perceived consequences in OT than in IT. A hacked piece of machinery could maim or kill someone. There are standards for separating networks on the factory floor from the Internet. Nevertheless, security concerns can be taken to extremes. The perceived risk all too often outweighs the tangible improvements. The punishment for failure trumps the reward for success.

Third, few people use OT and even fewer are responsible for maintaining it. On many factory floors, teams are unwilling to touch a piece of automation because everyone who knew the system has retired. It works; leave it alone. At its extreme, OT appears as a sort of mystery cult, hidden behind walls of obfuscation and guarded by a zealous priesthood. Most OT professionals have not kept pace with the latest IT technologies. They are highly valued for knowing the legacy OT technologies. To be fair, is it logical for them to welcome changes that bring in technology they do not know and make obsolete the technology they do?

The result of these three factors is that the gulf between OT and IT has grown wider and wider. No one in IT knows what MODBUS is. No one in OT knows what Node.js is.

Democratize the data

The gulf between IT and OT is what is blocking the promise of the industrial internet of things (IIOT) and Industry 4.0. The people who know how to access the data, the OT team, know nothing about new technologies such as machine learning and event sourcing architecture. The people who know these new technologies, the IT team, know nothing about how to access the data. In fact, they are allowed nowhere near it for fear they will break something. To the OT team’s credit, that fear may be justified, but it still blocks progress.

To move forward with Industry 4.0, we must democratize the data. That is, we must make the data produced on the factory floor available to everyone, both OT and IT, without compromising operations or security. In the following sections we will present how to achieve this goal.

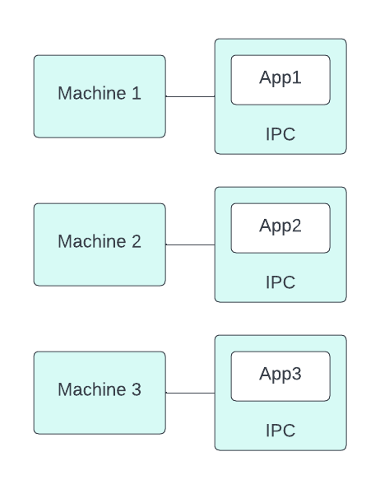

Migrating to edge

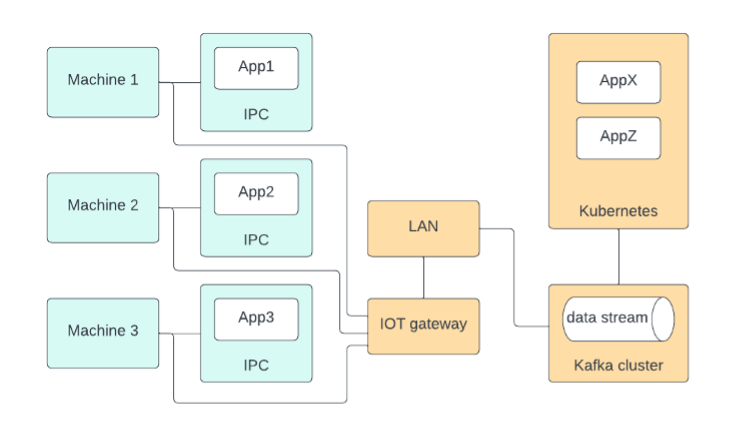

All modern equipment on the factory floor has digital inputs and outputs. The digital IO is connected to programmable logic controllers (PLC). The PLCs connect to an industrial personal computer (IPC) for human machine interface (HMI) either through a serial port or through a legacy serial bus protocol such as MODBUS.

I spell out all these things because to the OT team they are everyday terms but to the IT team they are completely foreign. If you say “IPC” and “HMI” it means nothing. If you say a tower PC and a touch screen, they know what you mean.

There can be hundreds, even thousands, of IPCs on a factory floor. These IPCs are usually Microsoft Windows workstations. They are rarely updated. You might even find Windows 95 running. Updating all these stations is a labor-intensive, manual process.

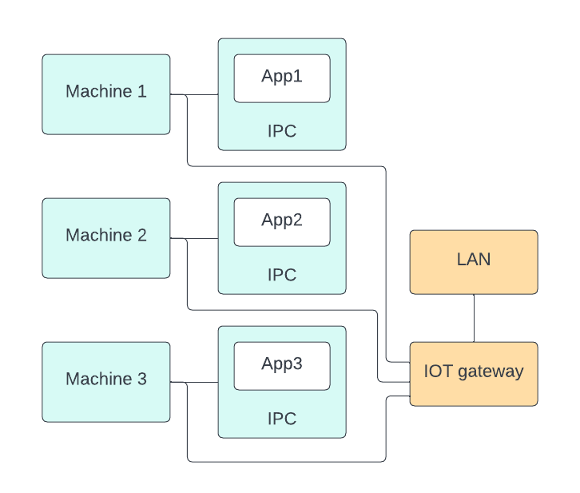

Add IOT gateway

The first step in data democratization is to add an IOT gateway. The gateway does nothing more than bridge from legacy protocols to Internet protocols. So instead of straight serial ports or MODBUS, the data is available through TCP/IP on a local area network.

That is a big first step, but it is still not enough to make the data useful to the IT crowd.

A common question is: why can’t I just run my analysis on the IOT gateway? It’s a computer after all. True enough, but it is a computer with limited resources. Whatever analysis you wish to deploy now, you will want to add more and more. You will quickly exhaust the resources of the gateway. More importantly, your analysis will start to consume resources needed for the gateway’s primary purpose, which is protocol translation. The gateway should focus on that one purpose and leave the analysis to other platforms.
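The protocol translation the gateway performs can be sketched as follows. This minimal example validates a MODBUS RTU "read holding registers" (function 0x03) response and re-emits it as a JSON message that could be sent over TCP/IP. There is no real serial port here: `build_response` fabricates a frame in place of a PLC reply, and the `machine_id` label and register values are hypothetical.

```python
import json
import struct


def crc16_modbus(data: bytes) -> int:
    """CRC-16/MODBUS: initial value 0xFFFF, reflected polynomial 0xA001."""
    crc = 0xFFFF
    for byte in data:
        crc ^= byte
        for _ in range(8):
            if crc & 1:
                crc = (crc >> 1) ^ 0xA001
            else:
                crc >>= 1
    return crc


def build_response(unit: int, registers: list[int]) -> bytes:
    """Build a function-0x03 response frame, standing in for a real PLC reply."""
    body = bytes([unit, 0x03, 2 * len(registers)])
    body += struct.pack(f">{len(registers)}H", *registers)  # registers are big-endian
    return body + struct.pack("<H", crc16_modbus(body))     # CRC is sent low byte first


def parse_response(frame: bytes) -> dict:
    """Validate a function-0x03 response and return its registers."""
    if len(frame) < 5:
        raise ValueError("frame too short")
    body = frame[:-2]
    (crc,) = struct.unpack("<H", frame[-2:])
    if crc16_modbus(body) != crc:
        raise ValueError("CRC mismatch")
    unit, function, byte_count = body[0], body[1], body[2]
    if function != 0x03 or byte_count != len(body) - 3 or byte_count % 2:
        raise ValueError("unexpected function code or length")
    registers = struct.unpack(f">{byte_count // 2}H", body[3:])
    return {"unit": unit, "registers": list(registers)}


def to_json_message(frame: bytes, machine_id: str) -> str:
    """Translate one serial frame into a JSON message for the LAN."""
    return json.dumps({"machine_id": machine_id, **parse_response(frame)})


if __name__ == "__main__":
    frame = build_response(unit=1, registers=[230, 1500])
    print(to_json_message(frame, machine_id="press-07"))
```

Note how little the gateway does: it checks the frame, unpacks it and forwards it. Keeping it this narrow is what leaves its limited resources free for translation.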

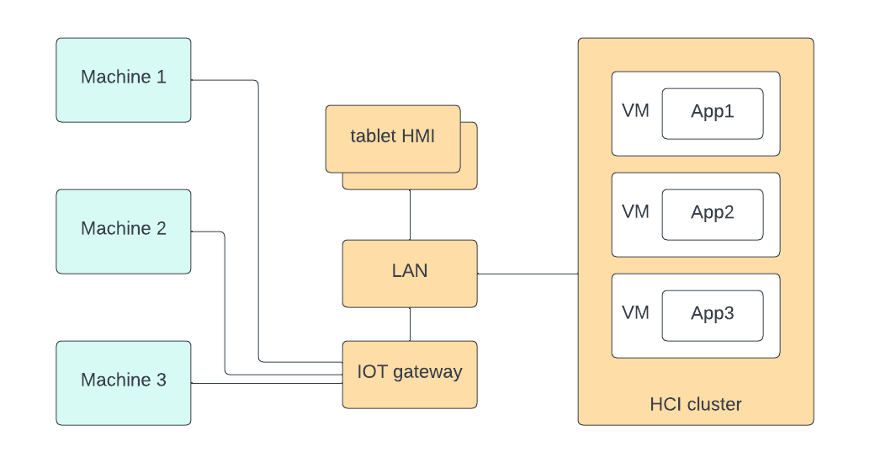

Port applications

Once the machine data is available on the network, it is possible to move the applications that are on the IPCs to virtual machines (VMs) running on a hyperconverged infrastructure (HCI) cluster. This move makes managing, monitoring and updating the applications much easier. It also makes the applications more reliable. If an IPC fails, it stays down until someone repairs it, but if a VM fails it can be restarted automatically elsewhere in the cluster. The move also enables new HMIs such as touch tablets.

Add data stream

The next step is to add a data stream for event sourcing, such as Kafka. This step can run in parallel with, or in sequence after, moving the IPCs to VMs.

Once the data is in an event stream, data scientists and application developers can read the data in a format that is familiar to them.
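The idea behind an event stream can be illustrated with a small in-memory sketch. This is a toy stand-in, not the Kafka API: `EventLog` and `Consumer` are hypothetical names, and the machine events are made up. It shows the two properties that matter to readers of the data: events are only ever appended, and each consumer keeps its own position, so a new reader can replay history without disturbing anyone else.

```python
import json
from dataclasses import dataclass, field


@dataclass
class EventLog:
    """Append-only log for one topic; a toy stand-in for a stream partition."""
    events: list[str] = field(default_factory=list)

    def append(self, event: dict) -> int:
        """Store the event as JSON and return its offset."""
        self.events.append(json.dumps(event))
        return len(self.events) - 1

    def read(self, offset: int = 0) -> list[dict]:
        """Return every event from `offset` onward; the log is never modified."""
        return [json.loads(raw) for raw in self.events[offset:]]


@dataclass
class Consumer:
    """Each consumer tracks its own offset, so readers do not affect one another."""
    log: EventLog
    offset: int = 0

    def poll(self) -> list[dict]:
        """Return events not yet seen by this consumer and advance its offset."""
        batch = self.log.read(self.offset)
        self.offset += len(batch)
        return batch


if __name__ == "__main__":
    log = EventLog()
    dashboard = Consumer(log)
    log.append({"machine_id": "press-07", "temp_c": 71.5})
    log.append({"machine_id": "press-07", "temp_c": 73.0})
    print(dashboard.poll())   # the dashboard sees both events
    analyst = Consumer(log)   # a later reader replays from the start
    print(analyst.poll())
    print(dashboard.poll())   # nothing new for the dashboard
```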

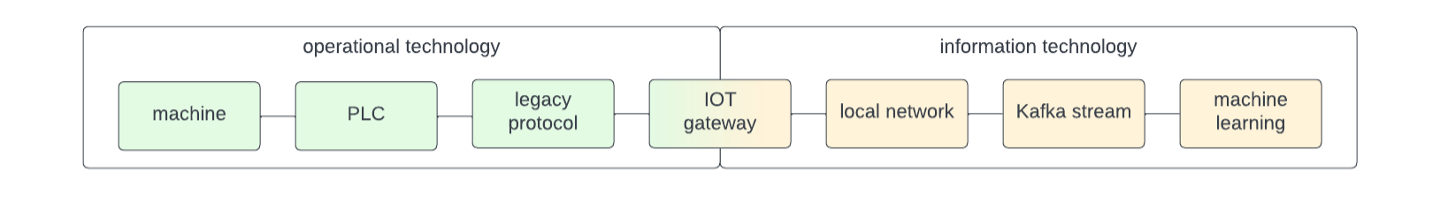

The connectivity of the system from OT to IT is shown in the following figure. It does not mean that OT is handing off management of this infrastructure to IT. Instead, it means OT can start using the technology currently used in IT.

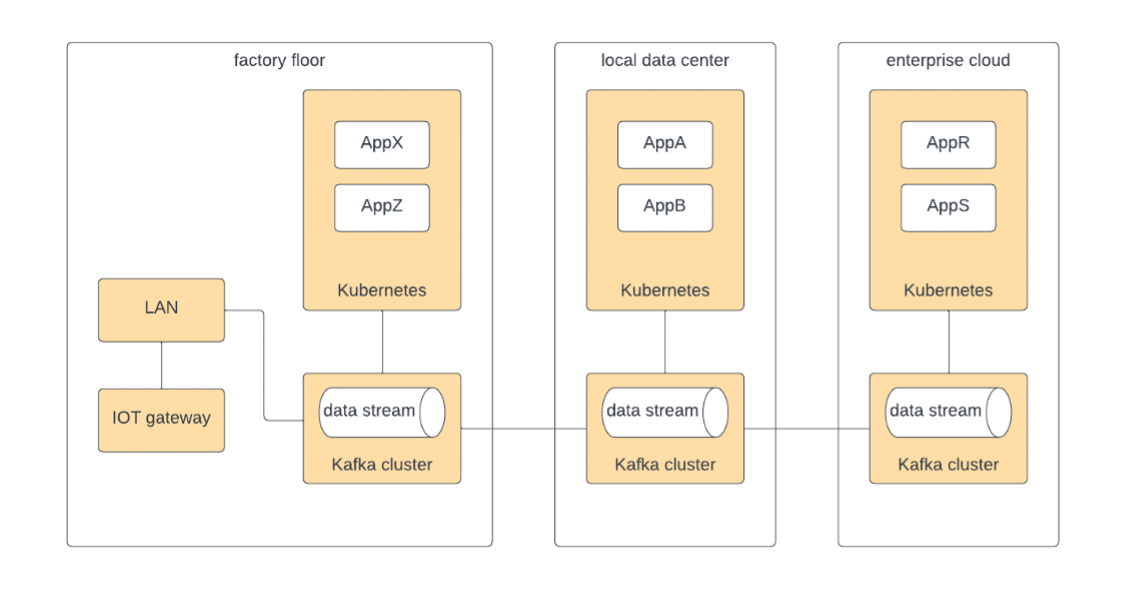

Extend the data streams

Once the data is in an event source platform such as Kafka, it can be selectively and securely replicated between physical clusters. Streams on the factory floor can be replicated to the local data center for applications to process data there without direct access to the floor network. Similarly, data can be replicated to the cloud for yet other applications to process. The flow of streaming data can be replicated in both directions.
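Selective, two-way replication can be sketched in the same spirit. The example below is an in-memory illustration only, not a Kafka client or its replication tooling. The `Cluster` and `Replicator` classes and the topic names are hypothetical. Each replicator copies only the topics on its allow list and remembers how far it has copied, so raw floor data never leaves the floor unless it is explicitly listed.

```python
from collections import defaultdict


class Cluster:
    """Toy cluster: a named set of append-only topics."""

    def __init__(self, name: str):
        self.name = name
        self.topics: dict[str, list[dict]] = defaultdict(list)

    def publish(self, topic: str, event: dict) -> None:
        self.topics[topic].append(event)


class Replicator:
    """Copies only allow-listed topics from source to target, resuming from
    the last replicated offset so events are not duplicated."""

    def __init__(self, source: Cluster, target: Cluster, allowed: set[str]):
        self.source = source
        self.target = target
        self.allowed = allowed
        self.offsets: dict[str, int] = defaultdict(int)

    def run_once(self) -> int:
        """Replicate pending events and return how many were copied."""
        copied = 0
        for topic in self.allowed:
            pending = self.source.topics.get(topic, [])[self.offsets[topic]:]
            for event in pending:
                self.target.publish(topic, event)
            self.offsets[topic] += len(pending)
            copied += len(pending)
        return copied


if __name__ == "__main__":
    floor, datacenter = Cluster("floor"), Cluster("datacenter")
    up = Replicator(floor, datacenter, allowed={"machine.telemetry"})
    down = Replicator(datacenter, floor, allowed={"production.schedule"})

    floor.publish("machine.telemetry", {"machine_id": "press-07", "temp_c": 71.5})
    floor.publish("plc.raw", {"register": 40001, "value": 230})  # stays on the floor
    datacenter.publish("production.schedule", {"order": "A-100", "qty": 500})

    print(up.run_once(), down.run_once())
    print(sorted(datacenter.topics))
```

Using one replicator per direction keeps the two flows independent, which is one way to reflect the point that applications in the data center never need direct access to the floor network.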